Disclaimer – if you do find that anything here is inaccurate, or something obvious is missing, then hit me up on Twitter – @303sec

Unicode is interesting. It is confusing, and constantly evolving, which is really cool from a security research perspective. Loads of interesting attacks exist using encoding issues – is sometimes possible to bypass WAFs or filters with Unicode and encoding based techniques, as well as finding weird and wonderful logic / normalisation bugs. There are loads of different methods of encodings strings that you may have seen before:

%u0048

\u0048

%E2%99%A5But we’re getting ahead of ourselves – what even is Unicode? How is it different from beloved ASCII?

Unicode – The Basics

I recommend reading through the ‘Joel on Software’ blog post, which will give you a much clearer introduction to Unicode than I’m going to give, but the tl;dr is that there are some common misconceptions on what Unicode even is.

Unicode does not work in the 7 bit, 127 characters ASCII way, because there is a lot of complexity with language. Some languages are read in different directions – some are runic symbols – some are literally emojis with slight variations. Considering that language and symbology is constantly evolving, we’ll need to add new symbols all the time – and what happens when we run out of space in our new map?

Unicode handles this by abstracting encoding away from the process and using ‘code points’ rather than mapping characters to memory. So ‘Hello’, in Unicode, is simply:

U+0048 U+0065 U+006C U+006C U+006F

These are just points mapped to the already defined Unicode map. (see http://www.unicode.org/). No memory mapping to character stuff has happened yet, because that’s what encoding is for.

A common misconception is that ‘Unicode’ is simply a 16-bit code where there are 65,536 possible characters, rather than ASCII’s 7-bit 128 possibles . This is not accurate, for a few reasons. The first incorrect assumption here is that Unicode is not even an encoding type – just the ‘platonic ideal’, the specification/map for the encoding, whereas the encoding is what does the real legwork.

The second assumption is that encoded Unicode just adds an extra byte (and a bit) to the characters, but UTF-8 (Unicode Transformation Format) does not quite work like that. UTF-8 is the most common encoding method for Unicode, and is practically the standard on the web. The UTF-8 website describes it like this:

“UTF-8 encodes each Unicode character as a variable number of 1 to 4 octets, where the number of octets depends on the integer value assigned to the Unicode character. It is an efficient encoding of Unicode documents that use mostly US-ASCII characters because it represents each character in the range U+0000 through U+007F as a single octet.”

What this means to non-robots is that UTF-8 is an encoding scheme that can be read as good old ASCII in the first octet, but then we’re also free to use extra memory for cool characters like ⺨if and when we need to, without spending loads of memory on all that empty space because it’s just a clever encoding.

So this is why, when looking at wrongly encoded, pages we sometimes get some real text with these characters – ������� – these are beyond the printable range of Latin-1/ASCII, which means that they are probably random Unicode parts that are being wrongly decoded.

One other thing worth mentioning here is that Unicode is constantly changing and improving, and the versioning becomes important because you might end up making some assumptions with certain functions – we’ll see this with the first example.

Unicode Normalization Account Takeover

It could be possible to takeover an account by using Unicode equivalent characters to register the same username, and after the process of normalization this could lead to the same username being registered twice.

This first case-study vulnerability is an account takeover in Spotify. By registering a user, for example ‘ᴮᴵᴳᴮᴵᴿᴰ’, you could takeover the account ‘bigbird’, due to a bug in the functionality designed to canonicalize the username (as in, ignoring cases & whitespace). This is the basic flow of the attack, which I’ve shamelessly copy/pasted directly from Spotify’s excellent post-mortem:

- Find a user account to hijack. For the sake of this example let us hijack the account belonging to user bigbird.

- Create a new spotify account with username ᴮᴵᴳᴮᴵᴿᴰ (in python this is the string u’\u1d2e\u1d35\u1d33\u1d2e\u1d35\u1d3f\u1d30′).

- Send a request for a password reset for your new account.

- A password reset link is sent to the email you registered for your new account. Use it to change the password.

- Now, instead of logging in to account with username ᴮᴵᴳᴮᴵᴿᴰ, try logging in to account with username bigbird with the new password.

- Success! Mission accomplished.

They were implementing XMPP’s nodeprep canonicalization method which should have been sufficient here, but for some reason it wasn’t… In their writeup, they state that this is roughly the code used, which should by all accounts prevent this bug but didn’t:

from twisted.words.protocols.jabber.xmpp_stringprep import nodeprep

def canonical_username(name):

return nodeprep.prepare(name)XMPP nodeprep is specified in http://tools.ietf.org/html/draft-ietf-xmpp-nodeprep-03 and it clearly says there that it is supposed to be idempotent and handles unicode names.

So… what happened?

Initially, when resetting the password for ‘ᴮᴵᴳᴮᴵᴿᴰ’, the canonical_username was applied once – so the email to send the password reset to got sent to the address associated with the newly created account with canonical username ‘BIGBIRD’.

However, when the link was used, canonical_username was once again applied, yielding ‘bigbird’ so that the new password was instead set for the ‘bigbird’ account. The mistake was that Spotify were relying on nodeprep.prepare being idempotent (as in, making the same function call with the same parameter will always return the same result), when it evidently wasn’t.

So if XMPP nodeprep says that it should be idempotent, then why did this happen? The tl;dr is that nodeprep.prepare was using Unicode 3.2 under the hood, and when using character sets from later versions of Unicode, canonicalization got screwed up as it’s quite a tricky thing to do with Unicode characters.

Interestingly, the python 2.4 version caused the twisted code to throw an exception if the input is outside Unicode 3.2, whereas they found it passed when using Unicode data from python 2.5, so this issue was somehow introduced in Python 2.5

Unicode Case Mapping Collisions Account Takeover

Github was also affected with a unicode ‘Case Mapping Collisions’ vulnerability, which is slightly different to the above example. From the report:

A case mapping collision occurs when two different characters are uppercased or lowercased into the same character. This effect is found commonly at the boundary between two different protocols, like email and domain names.

This is similar in approach to taking over an account when there is a character filter in the username registration, removing specific characters (such as ‘ ‘ or %0A) after checking if the user exists already, allowing us to register as ‘a dmin’, which gets normalized to ‘admin’, giving us access to this account.

An example:

'ß'.toLowerCase() // 'ss'

'ß'.toLowerCase() === 'SS'.toLowerCase() // true

// Note the Turkish dotless i

'John@Gıthub.com'.toUpperCase() === '[email protected]'.toUpperCase()So by registering John@Gıthub.com, it was possible to take over the account of [email protected]. Neat!

This is an (apparently) exhaustive list of all Unicode collisions, from Wisdom:

Uppercase

| Char | Code Point | Output Char |

|---|---|---|

| ß | 0x00DF | SS |

| ı | 0x0131 | I |

| ſ | 0x017F | S |

| ff | 0xFB00 | FF |

| fi | 0xFB01 | FI |

| fl | 0xFB02 | FL |

| ffi | 0xFB03 | FFI |

| ffl | 0xFB04 | FFL |

| ſt | 0xFB05 | ST |

| st | 0xFB06 | ST |

Lowercase

| Char | Code Point | Output Char |

|---|---|---|

| K | 0x212A | k |

It should also be possible to find identical Unicode characters using Compart. There is almost definitely a scripted approach somewhere out there that I haven’t found, so please let me know via twitter if you know any! @303sec

Bypass ASP.NET Cross-Site Scripting Filter with Unicode Normalization

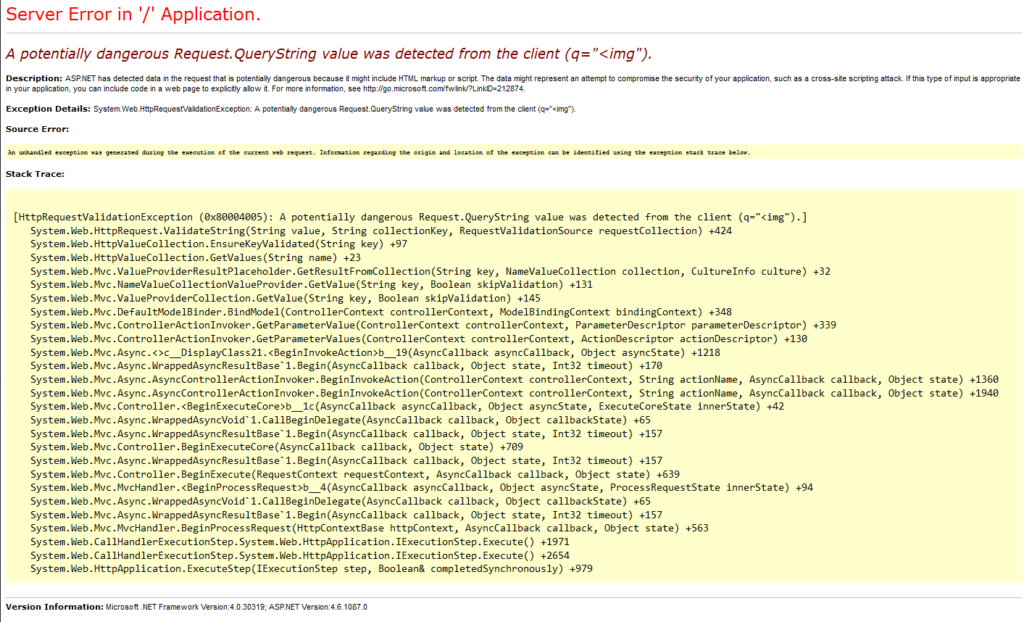

Have you ever been on a pentest and tried using an XSS payload only to get this error response?:

This is the ASP.NET Request Validator, and I hate it. It’s a pretty annoying feature for devs and pentesters alike, and pretty much just blocks the following:

&#

<A through to <Z (upper and lower case)

<!

</

<?I could go on and on about how this is a bad XSS filter that just gives developers a false sense of security… I mean, what about javascript:alert()? or onload=alert()? Or any DOM based XSS? … Anyway, there’s also a sneakier Unicode Normalization approach which can sometimes work for stored cross site scripting! Check out these three payloads:

<script>alert(document.domain)</script>

%EF%BC%9Cscript%EF%BC%9Ealert%28document.domain%29%EF%BC%9C%2Fscript%EF%BC%9E

%uff1cscript%uff1ealert(document.domain)%uff1c/script%uff1eThey are all the same Unicode characters – U+FF1C & U+FF1E, just with different types of URL encoding. These payloads will all work to bypass the ASP.NET filter, and if stored in an MSSQL database there is the possibility of ‘normalization’ – the Unicode characters will be effectively re-mapped to their Latin-1 equivalent, in this case our good friends < and >, and when reflected on the page will pop a lovely alert box.

Bypassing PHP Filters with Overly Long UTF Encoding

Back in the days of PHP 5, sirdarkcat found some cool bugs in which overly-long UTF characters weren’t being properly removed from PHP’s utf8_decode() function. So what even is ‘overly long UTF encoding’?

If you recall, UTF-8 is clever, and the size of a single character can change. If you’re using a basic US character set, it only takes up a little bit more space than ASCII/Latein-1, but you can also make these characters ‘bigger’ too. Consider this – ‘A’ is Code Point 65 (U+0065), or 0x41 in hex. Because it’s less than 128, it can be expressed as a one byte sequence, like ASCII… But the same character can also be expressed as a multi-byte sequence if we pad the start with zeros – 0x041 is still ‘U+0065’, as is 0x0000000041.

This issue was discussed by the Unicode Technical Committee, along with some other cool Unicode issues, and adjusted Unicode 3.1 to forbid non-shortest forms of characters. The PHP 5.2.11 issue, however, was born from the fact that PHP makes no checks on this matter.

Why is this an issue? Well, any PHP filters that remove slashes or quotes will now not be able to detect these byte sequences if UTF-8 decoded is after the check, as the characters will be seen as different URL-encoded characters:

' = %27 = %c0%a7 = %e0%80%a7 = %f0%80%80%a7

" = %22 = %c0%a2 = %e0%80%a2 = %f0%80%80%a2

< = %3c = %c0%bc = %e0%80%bc = %f0%80%80%bc

; = %3b = %c0%bb = %e0%80%bb = %f0%80%80%bb

& = %26 = %c0%a6 = %e0%80%a6 = %f0%80%80%a6

\0= %00 = %c0%80 = %e0%80%80 = %f0%80%80%80So if a PHP function was to try and remove the ‘ character, it would filter ‘ and %27. But %c0%a7 would not be seen the same character, and not filtered… allowing the utf8_decode() function to come in and decode that character into what should have been removed. Here’s a clear little code snippet directly taken from sirdarkcat’s blog:

// add slashes!

foreach($_GET as $k=>$v)$_GET[$k]=addslashes("$v");

// .... some code ...

// $name is encoded in utf8

$name=utf8_decode($_GET['name']);

mysql_query("SELECT * FROM table WHERE name='$name';");

?>Summary

There are a wealth of UTF encoding based vulnerabilities that have been found in the past, and it’s likely that due to the complexities there will be more found in the future. These vulnerabilities almost always stem from developers making incorrect assumptions around what Unicode is and how UTF-x encoding works, so from a dev perspective it is important to know the underlying mechanisms.

To me, however, this exposes a deep and unpleasant truth around security bugs – encoding issues are easy to test for, but difficult to understand. As we build complexity on top of complexity, it becomes paramount to make sure we have a deep understanding of the implications of everything we are doing, which for a developer is a maddening process of learning everything, but to an attacker it’s just seeing what happens when you register a user called ᴮᴵᴳᴮᴵᴿᴰ

References

- Spotify’s Unicode Account Takeover Vulnerability

- Various Unicode Normalization Attacks

- Bypass WAF with Unicode Compatability

- Compart Find Similar Unicode Characters

- Hacking Github with unicode dotless ‘i’

- Github description of dotless ‘i’ vulnerability

- Great Overlong UTF Explanation

- Unicode Security Considerations

- https://stackoverflow.com/questions/2200788/asp-net-request-validation-causes-is-there-a-list

- https://appcheck-ng.com/unicode-normalization-vulnerabilities-the-special-k-polyglot/

- https://stackoverflow.com/questions/912811/what-is-the-proper-way-to-url-encode-unicode-characters

- https://patzke.org/files/OWASP_Cologne-20141030-Unicode.pdf

- https://www.joelonsoftware.com/2003/10/08/the-absolute-minimum-every-software-developer-absolutely-positively-must-know-about-unicode-and-character-sets-no-excuses/

- https://security.stackexchange.com/questions/48879/why-does-directory-traversal-attack-c0af-work